Imagine this: You walk into a room, and your device already knows what you want to say. No swiping. No speaking. No typing.

Just… intention.

This is not a fantasy—it’s the next generation of communication, and it’s coming faster than anyone expected. Welcome to a world of no touch, no talk—where technology reads your thoughts, gestures, and emotions to help you connect without ever making a sound.

If that sounds revolutionary—or terrifying—you’re not alone. But this silent, invisible wave of communication is already being developed in labs across the globe. And soon, you won’t even need words to get your message across.

Let’s break down how communication without speaking or touching is about to flip the tech world on its head—and why it could redefine what it means to connect.

🧠 What Does “No Touch, No Talk” Mean?

The phrase refers to a new class of communication devices and systems that don’t require physical interaction or spoken language to function.

Instead, they rely on:

- Neural signals (your thoughts)

- Facial microexpressions

- Body language

- Eye movements

- Biometric feedback (heart rate, skin conductance, and the emotional state inferred from them)

You don’t type. You don’t speak. You simply think or move—and the device translates that into meaningful communication.

It’s invisible communication—and it’s not sci-fi anymore.

🧬 The Core Tech Powering This Revolution

Let’s look at what’s making this possible:

1. Brain-Computer Interfaces (BCIs)

Think Neuralink and Synchron, which use implants, or NextMind (acquired by Snap in 2022), which used an external sensor. These devices read neural activity and convert it into digital commands.

Example: You think “Call Mom”—your device dials her number. No words. No touch.
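To make the "Call Mom" example concrete, here is a minimal Python sketch of the last step: turning a decoder's output into an action. Everything in it is an assumption for illustration. The label names, the `COMMANDS` table, the `pick_command` function, and the 0.8 threshold are hypothetical, and no real BCI vendor's API is shown. The one design idea worth noting is that the device does nothing when the decoder is not confident enough.

```python
from typing import Dict, Optional

# Hypothetical command table: decoder labels mapped to device actions.
COMMANDS = {
    "call_mom": "dial:Mom",
    "next_track": "media:next",
}


def pick_command(scores: Dict[str, float], threshold: float = 0.8) -> Optional[str]:
    """Return the action for the top-scoring label, or None if unsure."""
    if not scores:
        return None
    # Take the label the (assumed) decoder considers most likely.
    label, confidence = max(scores.items(), key=lambda item: item[1])
    # Ignore low-confidence guesses and labels with no mapped action (e.g. "rest").
    if confidence < threshold or label not in COMMANDS:
        return None
    return COMMANDS[label]


print(pick_command({"call_mom": 0.91, "next_track": 0.06, "rest": 0.03}))  # dial:Mom
print(pick_command({"call_mom": 0.55, "next_track": 0.40, "rest": 0.05}))  # None
```

A higher threshold means fewer accidental calls but more ignored intentions; where to set it is a product decision, not something this sketch settles.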

2. EMG Signal Readers

Companies like CTRL-labs (acquired by Meta in 2019) have developed wristbands that read the electrical signals sent to your muscles, even when the movement is too slight to see. It’s communication by intent, not motion.
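One simple way to spot muscle activity in a raw signal is to track its root-mean-square (RMS) amplitude and flag the moments it rises above a threshold. The Python sketch below does that on made-up numbers. The window size, the threshold, and the sample values are assumptions, and real EMG products use far more sophisticated decoding than this.

```python
import math
from typing import List, Sequence


def rms_envelope(samples: Sequence[float], window: int) -> List[float]:
    """RMS amplitude over consecutive, non-overlapping windows."""
    envelope = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        envelope.append(math.sqrt(sum(x * x for x in chunk) / window))
    return envelope


def detect_activations(samples: Sequence[float], window: int = 4,
                       threshold: float = 0.5) -> List[int]:
    """Indices of windows where the envelope first rises above the threshold."""
    onsets = []
    active = False
    for i, level in enumerate(rms_envelope(samples, window)):
        if level > threshold and not active:
            onsets.append(i)  # a new burst of activity starts here
        active = level > threshold
    return onsets


# Synthetic signal: rest, a two-window burst, rest, then another burst.
quiet = [0.05, -0.04, 0.03, -0.05]
burst = [0.9, -1.1, 1.0, -0.8]
signal = quiet + burst + burst + quiet + burst
print(detect_activations(signal))  # [1, 4]
```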

3. Eye Tracking + Micro-Expressions

Advanced eye-tracking tools interpret where you look, how your pupils dilate, and the tiniest facial twitches to predict emotion and intent.
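A common pattern in gaze-driven interfaces is dwell selection: if your eyes rest on one target long enough, it counts as a click. Here is a small Python sketch of that idea. The sample format (timestamp in seconds plus the name of the target under the gaze) and the 0.8-second dwell time are assumptions, not the output of any particular eye tracker.

```python
from typing import List, Optional, Tuple


def dwell_selections(samples: List[Tuple[float, Optional[str]]],
                     dwell: float = 0.8) -> List[str]:
    """Emit a target each time gaze rests on it continuously for `dwell` seconds."""
    selections = []
    current = None  # target currently under the gaze
    since = 0.0     # time the gaze arrived on it
    fired = False   # whether this fixation already triggered a selection
    for t, target in samples:
        if target != current:
            # Gaze moved: restart the dwell timer on the new target.
            current, since, fired = target, t, False
        elif target is not None and not fired and t - since >= dwell:
            selections.append(target)
            fired = True  # avoid re-selecting during the same fixation
    return selections


samples = [(0.0, "A"), (0.3, "A"), (0.6, "B"), (0.9, "B"),
           (1.2, "B"), (1.5, "B"), (1.8, None), (2.1, "A")]
print(dwell_selections(samples))  # ['B']
```

The glance at "A" is too short to count, so only "B" is selected. That is the trade-off with dwell time: shorter feels faster but triggers more accidental selections.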

4. Emotion AI + Biometrics

Using heart rate, breathing patterns, and skin response, devices can detect your emotional state—and adjust communication accordingly.
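As a rough illustration of how such a system might work, the Python sketch below compares current readings against a person's own resting baseline and flags when both heart rate and skin conductance are unusually high. The function names, the threshold of 2 standard deviations, and all the numbers are assumptions. It only flags physiological arousal, which is not the same thing as identifying a specific emotion.

```python
from statistics import mean, stdev
from typing import Sequence


def z_score(value: float, baseline: Sequence[float]) -> float:
    """How many standard deviations `value` sits above the resting baseline."""
    # Assumes the baseline has at least two readings that are not all identical.
    return (value - mean(baseline)) / stdev(baseline)


def arousal_level(heart_rate: float, skin_conductance: float,
                  hr_baseline: Sequence[float], sc_baseline: Sequence[float],
                  threshold: float = 2.0) -> str:
    """Return "elevated" only when both signals are well above baseline."""
    hr_z = z_score(heart_rate, hr_baseline)
    sc_z = z_score(skin_conductance, sc_baseline)
    if hr_z > threshold and sc_z > threshold:
        return "elevated"
    return "baseline"


# Made-up resting readings: beats per minute and microsiemens.
resting_hr = [62, 64, 63, 65, 61]
resting_sc = [2.0, 2.2, 2.1, 1.9, 2.3]
print(arousal_level(95, 4.0, resting_hr, resting_sc))  # elevated
print(arousal_level(64, 2.1, resting_hr, resting_sc))  # baseline
```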

💡 Real-Life Examples You Won’t Believe

These aren’t future fantasies—they’re already being prototyped or sold:

- Smart Glasses that send messages by blinking patterns and focusing gaze

- Silent Speech Interfaces that detect mouth muscle movement, even when you mouth words without making a sound

- VR Headsets that let you “talk” in games using thoughts and eye movements

- Assistive Tech for ALS patients that lets them type using just their brainwaves

- Military Devices that allow squads to coordinate silently during operations
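The blink-pattern idea from the smart glasses example is easy to sketch in Python. Each blink is classified as short or long by its duration, and the resulting pattern is looked up in a table of canned messages. The codebook, the 400 ms cutoff, and the function name are all hypothetical; no shipping product is being described.

```python
from typing import List, Optional

# Hypothetical codebook: blink pattern mapped to a message ("S" = short, "L" = long).
CODEBOOK = {
    "SS": "yes",
    "SL": "no",
    "LL": "help",
}


def decode_blinks(durations_ms: List[int], long_ms: int = 400) -> Optional[str]:
    """Translate a sequence of blink durations into a message, if one matches."""
    pattern = "".join("L" if d >= long_ms else "S" for d in durations_ms)
    return CODEBOOK.get(pattern)  # None when the pattern is not in the codebook


print(decode_blinks([120, 150]))       # yes
print(decode_blinks([130, 600]))       # no
print(decode_blinks([500, 520, 110]))  # None
```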

You’re already living in a world that’s learning to listen without ears and understand without words.

🤯 Why the Shift? Why Now?

1. Voice Fatigue and Privacy Concerns

Voice assistants are everywhere—but talking aloud isn’t always ideal. It’s slow, public, and vulnerable to eavesdropping. Thought-based or gesture-based tech offers privacy and speed.

2. Hands-Free Society

People are multitasking more than ever. Whether you’re driving, cooking, or wearing AR glasses, touchless input makes sense.

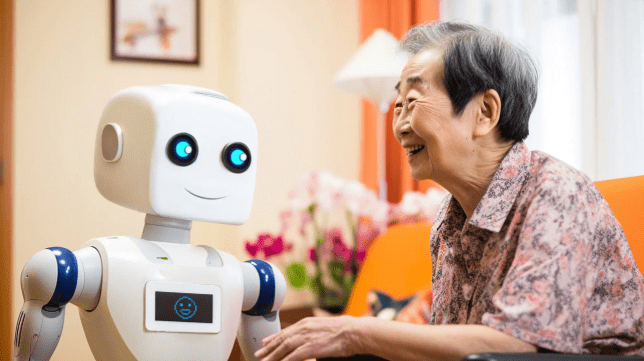

3. Accessibility Breakthroughs

Millions of people with speech impairments, paralysis, or neurological disorders could finally communicate freely—with their mind.

4. Efficiency

Neural and intent-based systems cut through noise. You don’t have to say or explain—the system already knows.

😨 But What Could Go Wrong?

Of course, this kind of powerful, invasive technology raises some serious red flags.

🔐 Data Privacy

What happens when your thoughts are the data? Could companies record your mental patterns? Could ads one day be injected into your subconscious?

🧠 Cognitive Overload

Constant brain-based input could overwhelm users. You think thousands of thoughts per hour—should your devices respond to all of them?

🤖 Loss of Control

If your device “predicts” your needs, could it misinterpret your intention? What if it acts before you change your mind?

🦾 Digital Dependence

As interfaces become more passive and brain-integrated, we risk losing manual control—and maybe even forgetting how to communicate the “old-fashioned” way.

🔍 Who’s Leading the Charge?

This is no fringe field. Major players are investing heavily:

- Elon Musk’s Neuralink – Began human trials of its implanted BCI chip in 2024

- Meta – Developing wrist-based EMG input for AR interfaces

- Apple – Patents suggest silent control tech for future wearables

- DARPA – Funds BCI research for battlefield communication

- OpenBCI – Building open-source neural devices for mind-controlled software

Startups like Cogwear, Emotiv, and Neurable are pushing boundaries with brain-sensing headsets, some of which you can buy now.

🌐 Communication Will Never Be the Same

Here’s what the future could look like in just a few years:

- “Silent Zoom” Meetings: Participants communicate with facial gestures and neural input—no one speaks aloud

- “Ghost Messaging”: You send a thought or emotion directly to another person’s device (or even brain)

- AI Translators: Instantly convert emotional intention into another language—or another mode of interaction

- Emotionally Adaptive Ads: Brands send you offers only when your brain is primed to buy

It sounds crazy—until you realize it’s already happening, just not mainstream yet.

✅ Conclusion: Are You Ready to Stop Talking?

For centuries, communication has been physical, verbal, or written. But we’re entering a new realm—a place where technology fades, and intention becomes language.

In this world:

- You won’t touch.

- You won’t talk.

- You’ll just connect.

The question isn’t if this will happen.

It’s whether we’ll control it… or be controlled by it.

Because once the world hears your thoughts without words—there’s no going back.

❓FAQs: The Future of Communication Devices

Q1: Are thought-based devices real today?

Yes. Emotiv already sells EEG headsets, and Neuralink’s implant is in human trials. Devices like these can control cursors, type, or launch commands.

Q2: How accurate are “no talk” communication tools?

Still improving. Some EMG wristbands and BCIs are reported to reach 90%+ intent recognition, typically on a limited set of commands and after per-user training.

Q3: Are these devices safe?

Most are non-invasive and rely on surface signals. Implants carry risks but offer higher fidelity.

Q4: Could someone read my mind without consent?

Not yet. Current tech decodes signals tied to deliberate, trained actions (such as imagining a movement or focusing on a target), not your subconscious or random ideas. But future misuse is a concern.

Q5: Will speaking and typing disappear?

Not fully. But in many contexts—AR/VR, disability, stealth work, remote ops—they could be replaced entirely.

Q6: What’s the biggest benefit of this tech?

Speed, accessibility, and emotional nuance. You can express intent or emotion instantly—even non-verbally.

Q7: What industries will adopt this first?

Healthcare, defense, gaming, accessibility tech, and AR/VR environments will lead the charge.